OAHU A.I.

A.I. in Production: Learnings & Findings

Join us for an evening dedicated to real-world AI applications!

NOVEMBER 6, 2025

EVENT COST: FREE

Food and drinks sponsored by Flowing Blue LLC

Entrepreneurs Sandbox

643 Ilalo St · Honolulu, HI

Featured Presenters

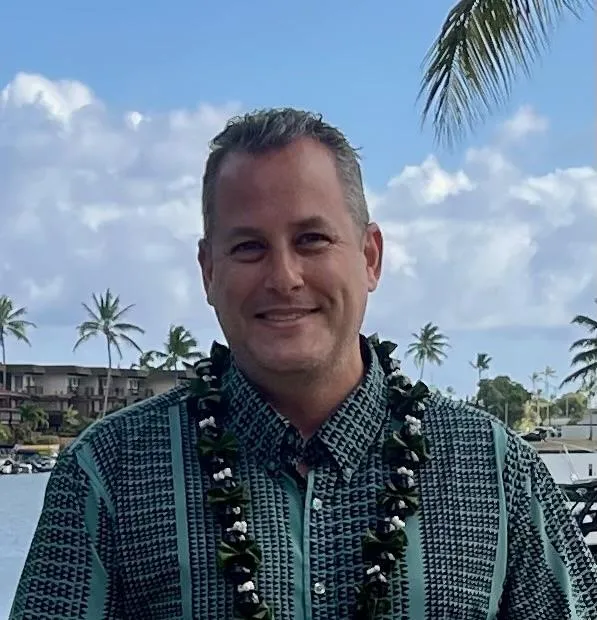

Scott Lichtenstein

When AI Picks Up the Phone: Making Technology Work for the Non-Tech Crowd

Most small business owners aren’t sitting around training models or writing prompts; they’re answering phones, serving customers, and trying not to miss sales. This talk explores how AI can be designed to meet them where they are, through tools that feel natural, solve real problems, and quietly deliver the power of AI without requiring any technical know-how.

David Pickett

Fast, Small, Accurate(-ish): A 4-Bit LLM Tour with Qwen3-32B

A 10-minute, data-first comparison of 4-bit quantization formats for the Qwen3-32B model across three environments: Hybrid CPU/GPU, NVIDIA-only, and Apple Silicon.

Benchmarks focus on accuracy (LiveBench, and possibly Aider Polyglot) and performance: prompt-processing speed, time to first token, steady-state token rate, and throughput under concurrent requests.

Formats covered (time permitting): GPU (mxfp4, nvfp4, AWQ-4bit, ExLlamaV3 4bpw), CPU/GPU via GGUF (Q4_0, Q4_K_M, UD-Q4_K_XL, IQ4_NL), and Apple MLX (MLX 4-bit, MLX 4-bit DWQ).

Goal: provide practical rules of thumb on speed, accuracy, and memory trade-offs, plus a simple decision path for choosing the right 4-bit format for specific hardware and workloads. No hype, just results.

Ben Ward

Building Real-Time Conversational AI for Mobile: Architecture Lessons from ConvoLive

A deep dive into ConvoLive’s real-time conversational pipeline, built on React Native and orchestrating multiple AI services.

Components: OpenAI GPT with scenario-specific prompt engineering; Google Cloud TTS with streaming; 3D avatar rendering and lip sync.

Focus on engineering tactics: reducing end-to-end latency, optimizing token usage/costs, handling mobile constraints.

Concrete examples: generating dynamic language-learning scenarios and prompts.

Production lessons: rate limiting, offline/spotty connectivity strategies, multilingual TTS quirks (e.g., dialect/locale issues like “Spanish isn’t one Spanish”).

What to expect

Presentations on AI projects shipped to production

Discover essential tools, patterns, and vendors

Live Raspberry Pi + AI Demo!

Schedule

NOVEMBER 6, 2025

6:30 PM: Doors Open & Socialize

7:00 PM: Announcements; presentations begin

8:00 PM: Presentations end; extended socializing

8:30 PM: Doors close

More info on meetup.com

RSVP and Secure Your Free Admission

Access complete event information by scanning the code below.